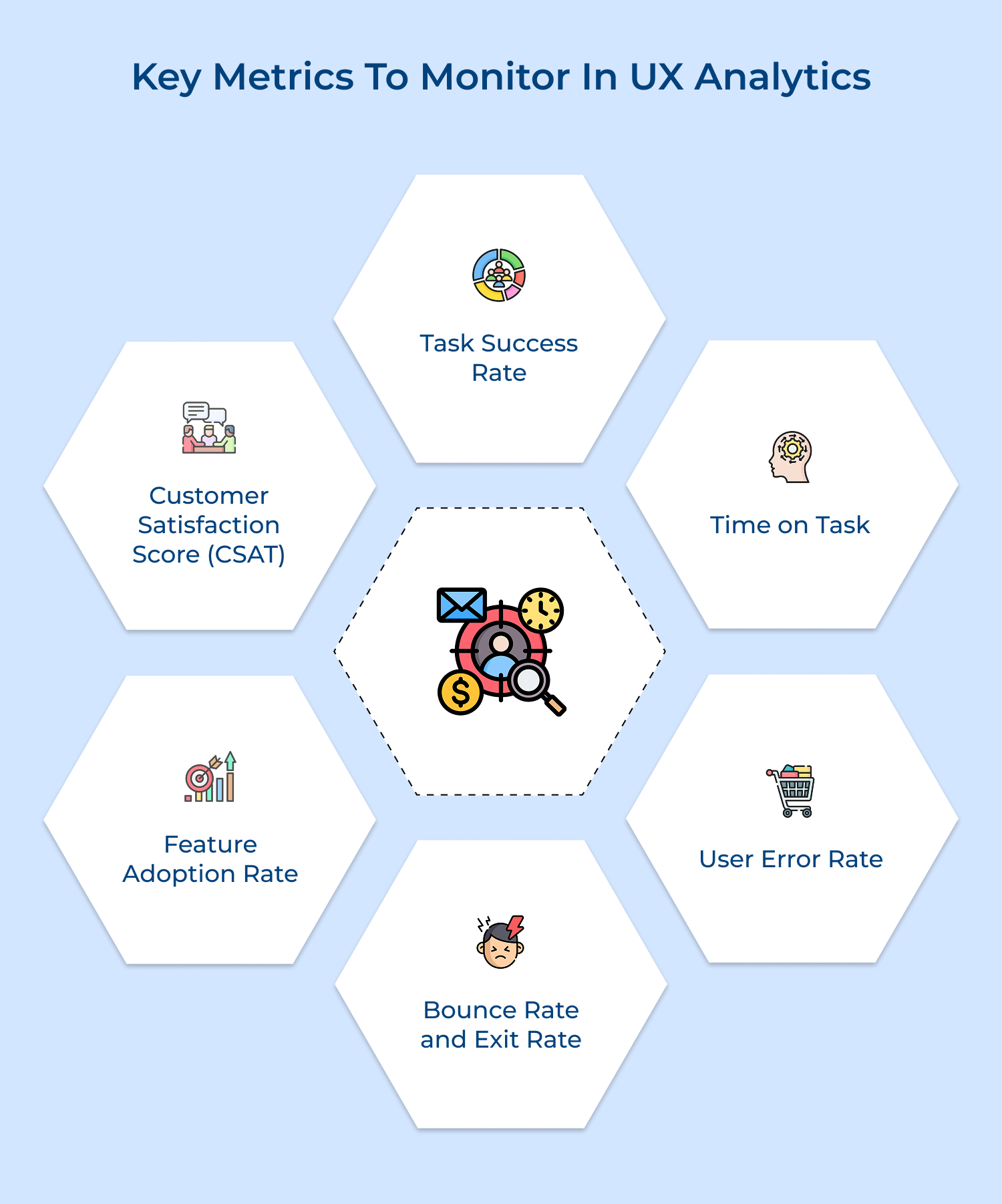

Task Success Rate

This measures the share of users who complete the task they set out to do, such as making a purchase or filling out a form.

A high success rate means your interface is doing its job well. A low one? That usually signals friction, confusion, or poor design. For complex tasks, it’s helpful to break things down step by step to see exactly where users drop off.
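To make "break things down step by step" concrete, here is a minimal sketch. It assumes each attempt is logged as the number of steps it reached in a fixed, ordered flow, and that reaching the last step counts as success. The function names, step names, and sample data are hypothetical; your analytics tool will have its own event model.

```python
# Minimal sketch: task success rate plus step-by-step drop-off.
# Assumption (hypothetical data model): each attempt is logged as the number of
# steps it reached in a fixed, ordered flow; reaching the last step = success.


def task_success_rate(steps_reached: list[int], total_steps: int) -> float:
    """Share of attempts that reached the final step of the flow."""
    if not steps_reached:
        return 0.0
    completed = sum(1 for n in steps_reached if n >= total_steps)
    return completed / len(steps_reached)


def step_funnel(steps_reached: list[int], step_names: list[str]) -> list[tuple[str, int, float]]:
    """For each step, return (name, attempts that reached it, share of the previous step's attempts)."""
    funnel = []
    previous = len(steps_reached)  # every logged attempt starts the flow
    for i, name in enumerate(step_names, start=1):
        reached = sum(1 for n in steps_reached if n >= i)
        share = reached / previous if previous else 0.0
        funnel.append((name, reached, share))
        previous = reached
    return funnel


if __name__ == "__main__":
    # Hypothetical checkout flow and sample data for eight attempts.
    steps = ["cart", "shipping", "payment", "confirmation"]
    reached = [4, 4, 2, 3, 1, 4, 2, 4]
    print(f"Task success rate: {task_success_rate(reached, len(steps)):.0%}")
    for name, count, share in step_funnel(reached, steps):
        print(f"{name:<13} {count} attempts ({share:.0%} of previous step)")
```

The step whose share of the previous step is lowest is usually the first place to look for friction.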

Time on Task

How long does it take users to complete an action? Tracking this tells you whether a task is smooth or frustrating.

If time on task suddenly spikes, there could be a usability issue or a technical glitch. It’s especially useful to compare time on task across devices to uncover mobile-specific challenges.
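A simple way to watch for spikes per device is sketched below, assuming you have (device, seconds) pairs for completed tasks. It uses the median rather than the mean because a few very slow sessions can distort an average. The 1.5x spike threshold, the function names, and the sample data are illustrative assumptions, not a standard.

```python
# Minimal sketch: median time on task per device, with a simple spike check.
# Assumptions: records are (device, seconds) pairs for completed tasks, and a
# 1.5x jump over a baseline median counts as a "spike" (an arbitrary choice).
from collections import defaultdict
from statistics import median


def median_time_by_device(records: list[tuple[str, float]]) -> dict[str, float]:
    """Group completion times by device and return the median for each."""
    by_device: dict[str, list[float]] = defaultdict(list)
    for device, seconds in records:
        by_device[device].append(seconds)
    return {device: median(times) for device, times in by_device.items()}


def flag_spikes(current: dict[str, float], baseline: dict[str, float], threshold: float = 1.5) -> list[str]:
    """Devices whose current median is at least `threshold` times their baseline median."""
    return sorted(
        device
        for device, seconds in current.items()
        if device in baseline and seconds >= threshold * baseline[device]
    )


if __name__ == "__main__":
    # Hypothetical completion times (seconds) for two periods.
    last_week = [("desktop", 40), ("desktop", 50), ("desktop", 45),
                 ("mobile", 60), ("mobile", 70), ("mobile", 65)]
    this_week = [("desktop", 42), ("desktop", 48), ("desktop", 47),
                 ("mobile", 110), ("mobile", 95), ("mobile", 130)]
    baseline = median_time_by_device(last_week)
    current = median_time_by_device(this_week)
    print("Medians this week:", current)
    print("Possible spikes:", flag_spikes(current, baseline))
```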

User Error Rate

When users make mistakes (whether it’s a form error, a wrong click, or a failed submission), it often points to unclear design or confusing flows.

Analyzing common errors can help you clean up those trouble spots and create a more intuitive experience.
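One possible way to compute an error rate and surface the most common errors is sketched here. It assumes errors are logged as (attempt_id, error_type) events and defines the rate as the share of attempts with at least one error; other teams count errors per attempt instead. The function name, error types, and sample data are hypothetical.

```python
# Minimal sketch: user error rate and the most common error types.
# Assumptions: errors are logged as (attempt_id, error_type) events, and the
# rate is the share of attempts that hit at least one error.
from collections import Counter


def error_summary(
    error_events: list[tuple[str, str]], total_attempts: int, top_n: int = 3
) -> tuple[float, list[tuple[str, int]]]:
    """Return (share of attempts with >= 1 error, top_n most frequent error types)."""
    attempts_with_errors = {attempt_id for attempt_id, _ in error_events}
    rate = len(attempts_with_errors) / total_attempts if total_attempts else 0.0
    common = Counter(error_type for _, error_type in error_events).most_common(top_n)
    return rate, common


if __name__ == "__main__":
    # Hypothetical error log for a checkout form with 10 attempts in total.
    events = [
        ("a1", "invalid_email"),
        ("a1", "invalid_email"),
        ("a2", "missing_zip"),
        ("a3", "invalid_email"),
        ("a4", "payment_declined"),
    ]
    rate, common = error_summary(events, total_attempts=10)
    print(f"Error rate: {rate:.0%}")
    print("Most common errors:", common)
```

Ranking error types by frequency points you at the fields or steps worth redesigning first.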

Bounce Rate and Exit Rate

If users land on a page and leave without interacting further (a bounce), or frequently leave your site from a specific page (a high exit rate), it could mean your content isn’t meeting their needs or expectations.

High numbers on key pages are a red flag and worth a closer look.
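The difference between the two rates is easiest to see in code. Below is a minimal sketch using the classic single-page definition of a bounce, assuming each session is stored as the ordered list of page paths it viewed. Analytics tools differ here: Google Analytics 4, for example, defines bounce rate as the share of sessions that were not engaged. The function names and sample paths are hypothetical.

```python
# Minimal sketch: classic bounce rate and exit rate for one page.
# Assumption: each session is the ordered list of page paths it viewed.
# Tools differ: Google Analytics 4 defines bounce rate via non-engaged
# sessions, not single-page sessions as done here.


def bounce_rate(sessions: list[list[str]], page: str) -> float:
    """Share of sessions landing on `page` that viewed no other page."""
    landings = [s for s in sessions if s and s[0] == page]
    if not landings:
        return 0.0
    return sum(1 for s in landings if len(s) == 1) / len(landings)


def exit_rate(sessions: list[list[str]], page: str) -> float:
    """Share of all views of `page` that were the last view in their session."""
    views = sum(s.count(page) for s in sessions)
    if views == 0:
        return 0.0
    exits = sum(1 for s in sessions if s and s[-1] == page)
    return exits / views


if __name__ == "__main__":
    # Hypothetical sessions.
    sessions = [
        ["/pricing"],
        ["/pricing", "/signup"],
        ["/home", "/pricing"],
        ["/home", "/features", "/pricing", "/signup"],
        ["/pricing"],
    ]
    print(f"/pricing bounce rate: {bounce_rate(sessions, '/pricing'):.0%}")
    print(f"/pricing exit rate:   {exit_rate(sessions, '/pricing'):.0%}")
```

Bounce rate only considers sessions that start on the page, while exit rate considers every view of it, which is why the two numbers can diverge for the same page.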

Feature Adoption Rate

This shows what share of users actually use a given feature. Low adoption might mean people aren’t discovering it or don’t find it helpful.

Tracking adoption trends helps guide rollouts and improvements.
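As a rough illustration of tracking adoption over time, the sketch below computes a weekly adoption rate. It assumes that for each week you have the set of active user IDs and the set of user IDs that used the feature, and it defines adoption as feature users divided by active users; definitions vary between teams. The week keys, IDs, and function name are hypothetical.

```python
# Minimal sketch: weekly feature adoption rate.
# Assumptions: per week you have the set of active user IDs and the set of
# user IDs that used the feature; adoption = adopters / active users.


def adoption_by_week(
    active_users: dict[str, set[str]], feature_users: dict[str, set[str]]
) -> dict[str, float]:
    rates = {}
    for week, users in sorted(active_users.items()):
        if not users:
            rates[week] = 0.0
            continue
        # Intersect so only feature users who were active that week are counted.
        adopters = feature_users.get(week, set()) & users
        rates[week] = len(adopters) / len(users)
    return rates


if __name__ == "__main__":
    # Hypothetical activity data for two weeks.
    active = {
        "2024-W01": {"u1", "u2", "u3", "u4"},
        "2024-W02": {"u1", "u2", "u3", "u4", "u5"},
    }
    used_feature = {"2024-W01": {"u1"}, "2024-W02": {"u1", "u2", "u5"}}
    for week, rate in adoption_by_week(active, used_feature).items():
        print(f"{week}: {rate:.0%}")
```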

Customer Satisfaction Score (CSAT)

CSAT captures how satisfied users say they are, usually through a short rating question. Combined with behavior data, this feedback gives you a fuller picture.

Surveys at key touchpoints tell you how users feel, while trends over time highlight where satisfaction is rising or falling.
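A minimal sketch of CSAT per touchpoint and period follows. It assumes ratings on a 1-5 scale and follows a common convention of counting a 4 or 5 as "satisfied"; adjust the threshold if your survey uses a different scale. The touchpoint names, periods, and function names are hypothetical.

```python
# Minimal sketch: CSAT per touchpoint and period.
# Assumptions: ratings are on a 1-5 scale and, following a common convention,
# a rating of 4 or 5 counts as "satisfied".
from collections import defaultdict


def csat(ratings: list[int], satisfied_threshold: int = 4) -> float:
    """Share of responses at or above the satisfied threshold."""
    if not ratings:
        return 0.0
    return sum(1 for r in ratings if r >= satisfied_threshold) / len(ratings)


def csat_trend(responses: list[tuple[str, str, int]]) -> dict[tuple[str, str], float]:
    """Map (touchpoint, period) -> CSAT, given (period, touchpoint, rating) responses."""
    grouped: dict[tuple[str, str], list[int]] = defaultdict(list)
    for period, touchpoint, rating in responses:
        grouped[(touchpoint, period)].append(rating)
    return {key: csat(ratings) for key, ratings in sorted(grouped.items())}


if __name__ == "__main__":
    # Hypothetical survey responses after checkout.
    responses = [
        ("2024-01", "checkout", 5), ("2024-01", "checkout", 3),
        ("2024-01", "checkout", 4), ("2024-01", "checkout", 2),
        ("2024-02", "checkout", 5), ("2024-02", "checkout", 4),
        ("2024-02", "checkout", 4), ("2024-02", "checkout", 2),
    ]
    for (touchpoint, period), score in csat_trend(responses).items():
        print(f"{touchpoint} {period}: {score:.0%}")
```

Grouping by touchpoint first and period second keeps each touchpoint's trend together, which makes rising or falling satisfaction easy to spot.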

Examples of Companies That Improved UX with Data-Driven Strategies

Curious how data can actually transform UX? These real-world examples show how leading companies used behavioral data to improve usability, engagement and results.

Netflix’s Personalization Engine

The streaming giant used extensive viewing behavior data to improve their recommendation system. Analysis of user watching patterns, pause points and content engagement helped refine both their interface and their personalization algorithms.

Their continuous A/B testing led to improved thumbnail selection, resulting in a 20-30% increase in user engagement. These data-driven improvements were estimated to save the company $1 billion a year in customer retention.

Airbnb’s Search Experience

The platform analyzed user search patterns and booking behaviors to optimize their search interface. Studying millions of user interactions revealed that travelers often struggled with location-based searches.

The company implemented a more intuitive map-based interface and smart filters, leading to a 12% increase in booking conversions. They also optimized their mobile experience based on touch-pattern analysis.

Spotify’s Discover Weekly Feature

In-depth analysis of listening patterns and user behavior led to the creation of their highly successful personalized playlist feature.

Data showed users were spending significant time creating custom playlists, leading to the development of automated, personalized recommendations. The feature has become one of their most popular offerings, with over 40 million users engaging weekly.

LinkedIn’s Profile Completion

Analysis of user engagement patterns showed profiles with higher completion rates led to better networking outcomes.

The company implemented a profile strength meter and guided profile completion process. These data-driven changes resulted in a 55% increase in profile completeness and higher user engagement rates.

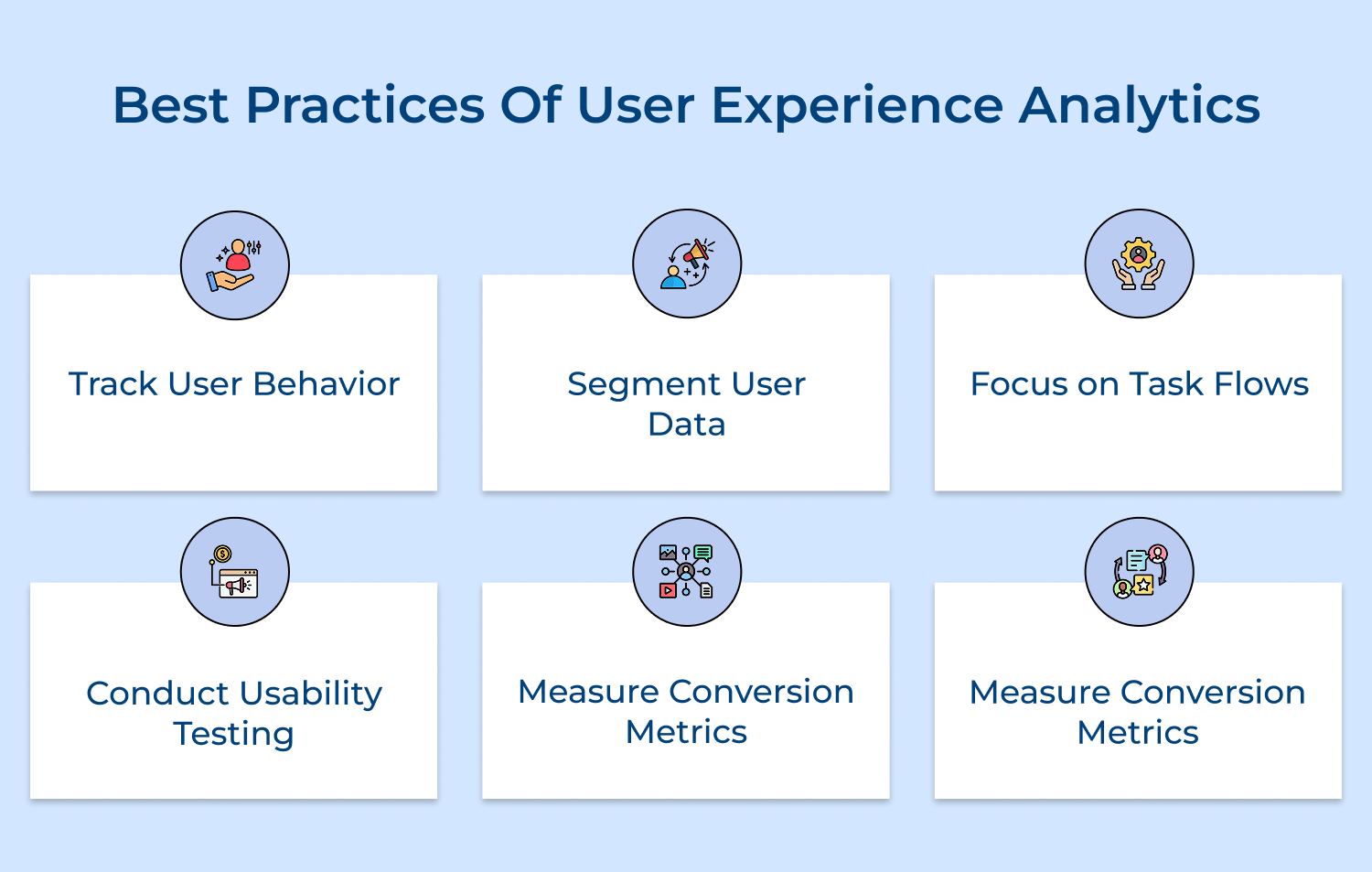

Best Practices of User Experience Analytics

To get real value from UX analytics, you need more than just data; you need the right approach. These best practices help you turn raw numbers into meaningful user improvements.